Galileo

Footballguy

A New York attorney submitted fake legal research, generated with ChatGPT, to the court. At least six of the cases cited as precedent were not real court cases.

Great TED talk on the current state of this fast-moving train: https://youtu.be/C_78DM8fG6E

a) how in the world do you think no one will notice this?

b) disbar him and behind-bars him for long enough to get everyone's attention.

Assuming you believe him, it seems he trusted ChatGPT to provide accurate and real cases.

I'm pondering whether this is better or worse, and I don't think I know!

Dang. Gonna go check out the jokes on Twitter

Thanks for the TED talk link. What did you feel were the main takeaways from it?

On the lighter side here's AI-generated Hank Williams singing "Straight Outta Compton": https://www.youtube.com/watch?v=2Jh7Jk3aSlo

"fellers with attitudes"

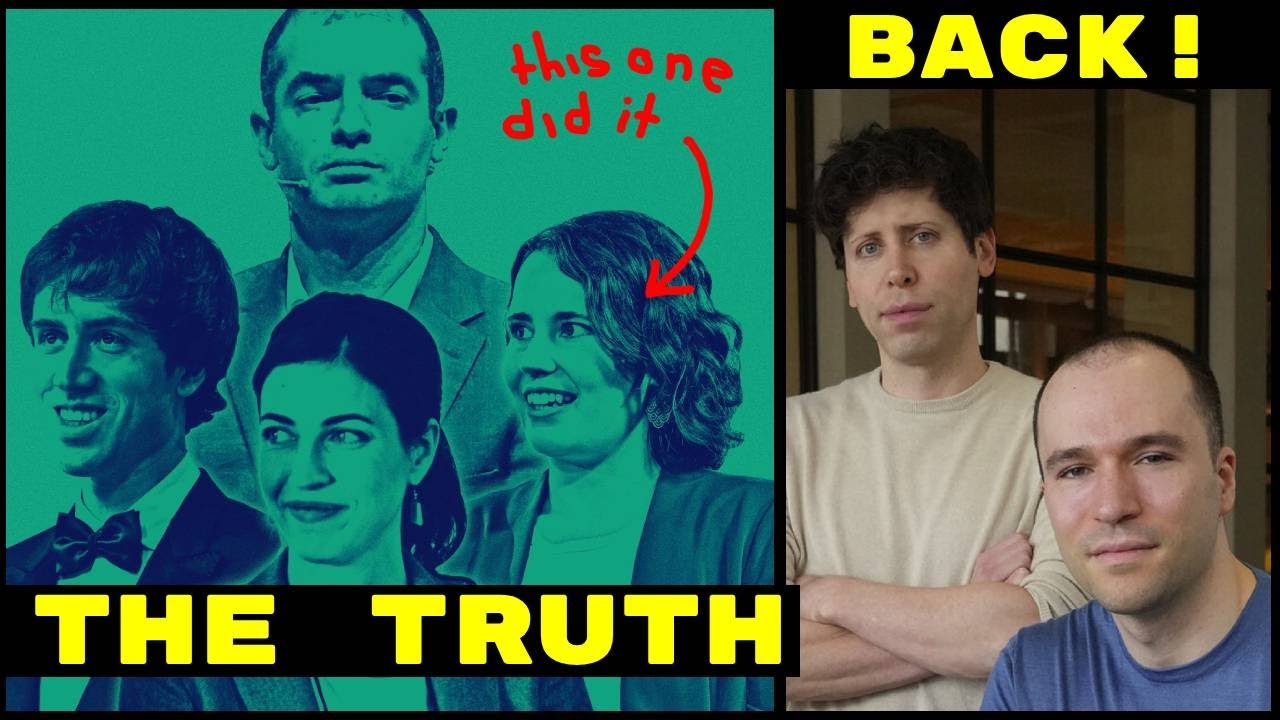

Coming out now that the firing might have been related to a big breakthrough in AGI: https://www.reuters.com/technology/...etter-board-about-ai-breakthrough-2023-11-22/.

I've read a few articles on this and I still don't understand. Is bringing him back a good or bad thing for AI safety?

I think that question is impossible to answer. Nobody knows what AI is going to develop into in the future, so opinions on safety are hard to judge. I think an easier question to answer is "Does Sam Altman put revenue ahead of safety?" I can't really answer that question either, but my guess is that he does not.

One of the concerns for AI safety is a fast takeoff. A fast takeoff is one where we develop AGI and it then quickly evolves from human-level intelligence into superhuman intelligence. This process could take days, and it is possible that it evolves too fast for humans to control. In this Lex Fridman podcast, Altman says that he thinks a slow takeoff that starts now is the safest path for AGI. My understanding is that a fast takeoff becomes much more likely the longer we wait to develop AGI, as computers will be more powerful at that time (e.g. quantum computers could be pretty standard). He goes on to say that they are making decisions to maximise the chance of a "slow takeoff, starting as soon as possible" scenario. So I think that Altman is doing what he thinks is right for AI safety, but there are lots of people who disagree.

I do not believe they are close to AGI; it is still decades away, à la fusion.

Why do you think that? I was definitely in that camp not so long ago, but it is astonishing how far AI has come in the past 10 years. I would never have believed you if you had told me 5 years ago that in 2023 we would have an AI that could pass the Turing test and pass the bar exam. But it's totally possible that getting this far is the easy part.

"Nobody knows what AI is going to develop into in the future."

With everything I read about AI, this is in the back of my mind.

Most impressive. Assuming it actually works like that and that's not just a fake marketing video. It's insane how far this technology has come over the last 5 years. This is the type of demo that makes me think that AGI is possible in the next 5-10 years.

As suspected, this is just a marketing video. They used still images and then narrated the prompts after. https://twitter.com/parmy/status/1732811357068615969?t=f8dfX4THfjkPhLINZC5GgQ

Air Canada came under further criticism for later attempting to distance itself from the error by claiming that the bot was “responsible for its own actions.”

Air Canada argued that despite the error, the chatbot was a “separate legal entity” and thus was responsible for its actions.

SlidesGPT is another AI application, powered by ChatGPT, for creating PowerPoint presentations.

Thanks for sharing. I tried uploading my PPT template, but the slides it made were just blank and didn't look real good. Do you know whether the slide deck includes the uploaded template if you pay to download it?

I haven't tried that yet, sorry. I think it's something like $2.50 to download, so maybe it's worth paying just to experiment. I'll possibly give it a go sometime today.

You guys are only uploading personal stuff, I assume? Not asking for GPT to synthesize and reformat work product, right?

Our organization is piloting the types of things Andy Dufresne is referencing here, using Microsoft Copilot among other vendors (I think), but everything stays in-house. ChatGPT would hang on to that information/data and learn from it, both of which would pose security concerns around proprietary info in the corporate realm, I would think.

I’m using it for work to create structure and to give a built-out first draft. I then do my edits and fine-tune. This stuff really does a lot of heavy lifting for me.

This is very interesting to me. Has your company addressed the use of ChatGPT or other external LLM systems in your work? Have they explicitly condoned it or forbidden it? Is the data and context you provide, or the output you mention, something that you would willingly share with a competitor if you were asked?

Just trying to get a bead on how confidential or proprietary the first-draft items are in your case without getting into too much specificity.

The insurance company I'm soon leaving has an internal GPT that it has condoned for use. I haven't really used it, but I know there are restrictions on what data you can and can't use.

It’s encouraged and paid for. We have also built our own generative AI search-and-answer engine into our SaaS platform.

I'm just getting started but have already learned a lot.

The $20/month ChatGPT Plus subscription gets you access to some pretty great features. The thing I like best is its ability to work with uploaded documents and create new ones. Some simple examples:

"From the text outline in the document I've uploaded, create a 5-page PowerPoint"

"From the PDF I've uploaded, write a three-paragraph summary"

"From the uploaded spreadsheet, create three different graphical visualizations"

"From the zipped file containing photos, choose the ten best and insert them into a presentation"

“Compose an email that makes it appear that I’ve read @Andy Dufresne’s boring PowerPoint slides. And randomly insert one of the ten most common misspellings in the body so it appears I wrote this.”

This YouTube playlist presents the best explanation I've seen of how Large Language Models (LLMs) work.

3Blue1Brown's YouTube Playlist on Neural Networks and LLMs

The channel, 3Blue1Brown, is known for making complex math and tech concepts easy to understand with explanatory visuals. This particular series covers neural networks, backpropagation, and transformers, all key concepts behind LLMs.

Wow... and a lot of this content is from six years ago.